pySpatial

Generating 3D Visual Programs for Zero-Shot Spatial Reasoning

We introduce pySpatial, a visual programming framework that equips MLLM agents with the ability to interface with spatial tools via Python code generation.

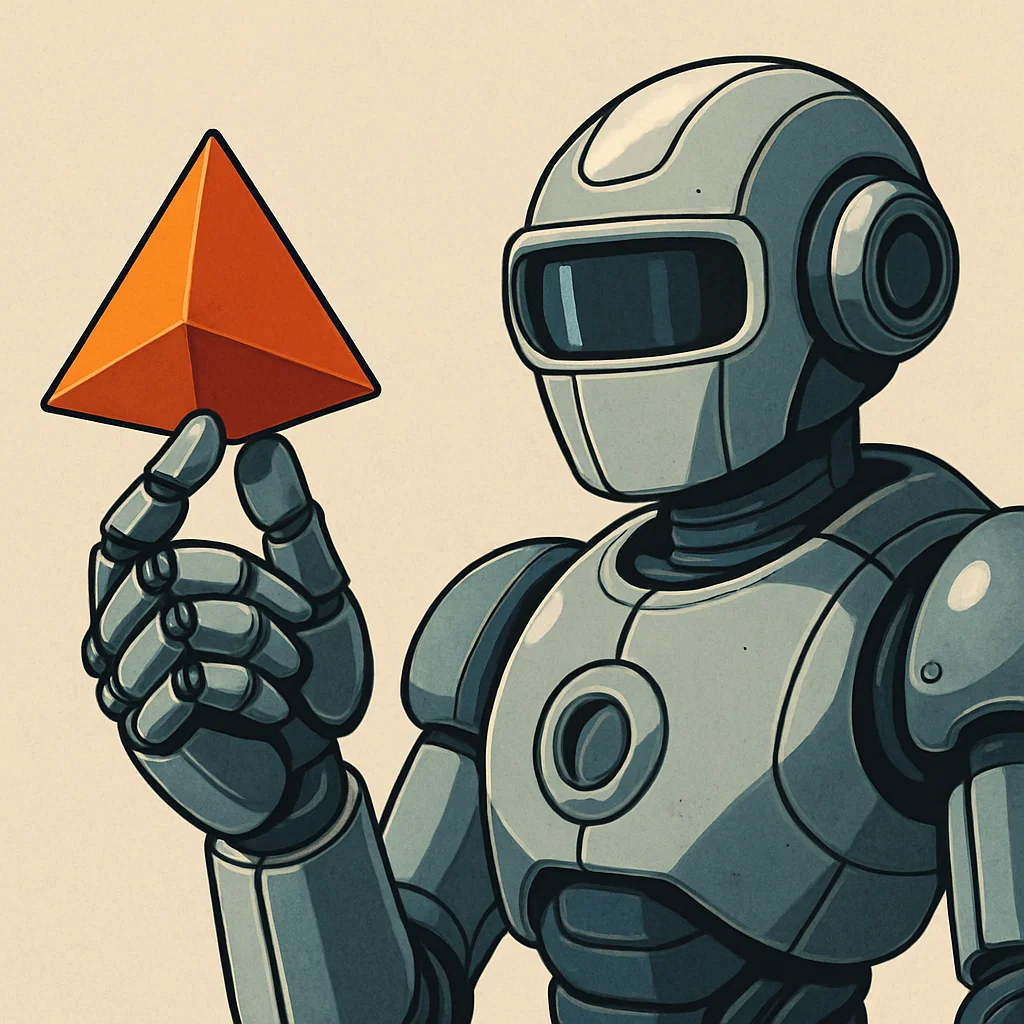

Multi-modal Large Language Models (MLLMs) have demonstrated strong capabilities in general-purpose perception and reasoning, but they still struggle with tasks that require spatial understanding of the 3D world. To address this, we introduce pySpatial, a visual programming framework that equips MLLMs with the ability to interface with spatial tools via Python code generation. Given an image sequence and a natural-language query, the model composes function calls to spatial tools such as 3D reconstruction, camera-pose recovery, and novel-view rendering. These operations convert raw 2D inputs into an explorable 3D scene, enabling MLLMs to reason explicitly over structured spatial representations. Notably, pySpatial requires no gradient-based fine-tuning and operates in a fully zero-shot setting. Experimental evaluations on the challenging MINDCUBE and OMNI3D-BENCH benchmarks demonstrate that pySpatial consistently surpasses strong MLLM baselines; for instance, it outperforms GPT-4.1-mini by 12.94 percentage points in overall accuracy on MINDCUBE. Furthermore, we conduct real-world indoor navigation experiments in which a robot successfully traverses complex environments using route plans generated by pySpatial, highlighting the practical effectiveness of our approach.
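The generate-then-execute loop described above can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not the pySpatial API: the tool names (`reconstruct`, `rotate_camera`, `render_view`), the `Scene` class, and the stubbed `generate_program` (which stands in for an MLLM call) are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Scene:
    """Hypothetical stand-in for a reconstructed 3D scene."""
    num_views: int
    operations: list = field(default_factory=list)


# Hypothetical tools. Real tools would wrap a 3D reconstruction model
# and a renderer; these stubs only record what was requested.
def reconstruct(images):
    return Scene(num_views=len(images))


def rotate_camera(scene, view_index, yaw_deg):
    scene.operations.append(("rotate", view_index, yaw_deg))
    return scene


def render_view(scene):
    # Placeholder: return a text description instead of pixels.
    return f"render after {len(scene.operations)} camera operation(s)"


TOOLS = {
    "reconstruct": reconstruct,
    "rotate_camera": rotate_camera,
    "render_view": render_view,
}


def generate_program(query):
    """Stand-in for the MLLM: returns a fixed program as text."""
    return (
        "scene = reconstruct(images)\n"
        "scene = rotate_camera(scene, view_index=0, yaw_deg=90.0)\n"
        "result = render_view(scene)\n"
    )


def run_visual_program(images, query):
    """Generate a program for the query and execute it against the tools."""
    program = generate_program(query)
    # Expose only the tools and the inputs to the generated code.
    # Note: emptying __builtins__ is NOT a real security sandbox.
    namespace = {"__builtins__": {}, "images": images, **TOOLS}
    exec(program, namespace)
    return program, namespace["result"]


program, result = run_visual_program(["view0.png", "view1.png"], "What is to my left?")
print(program)
print(result)
```

Keeping the program as plain text makes it easy to inspect or edit before execution, which is the property the visual programming setup relies on for interpretability.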

| Method | Reference | Overall | Rotation | Among | Around |
|---|---|---|---|---|---|
| Baseline Models | | | | | |
| Qwen2.5-VL-3B-Instruct | Bai et al. (2025) | 37.81 | 34.00 | 36.00 | 45.20 |
| GPT-4o | OpenAI (2024) | 42.29 | 35.00 | 43.00 | 46.40 |
| Spatial Mental Models | | | | | |
| Chain-of-Thought | Yin et al. (2025) | 40.48 | 32.00 | 36.00 | 58.00 |
| View Interpolation | Yin et al. (2025) | 37.81 | 35.50 | 36.50 | 42.80 |
| Cognitive Map | Yin et al. (2025) | 41.43 | 37.00 | 41.67 | 44.40 |
| Visual Programming Approaches | | | | | |
| ViperGPT | Suris et al. (2023) | 36.95 | 20.50 | 41.00 | 40.40 |
| VADAR | Marsili et al. (2025) | 40.76 | 33.50 | 40.67 | 46.80 |
| VADAR w/ Recon. | - | 35.62 | 31.00 | 36.83 | 36.40 |
| pySpatial (Ours) | - | 62.35 | 41.83 | 64.89 | 72.67 |

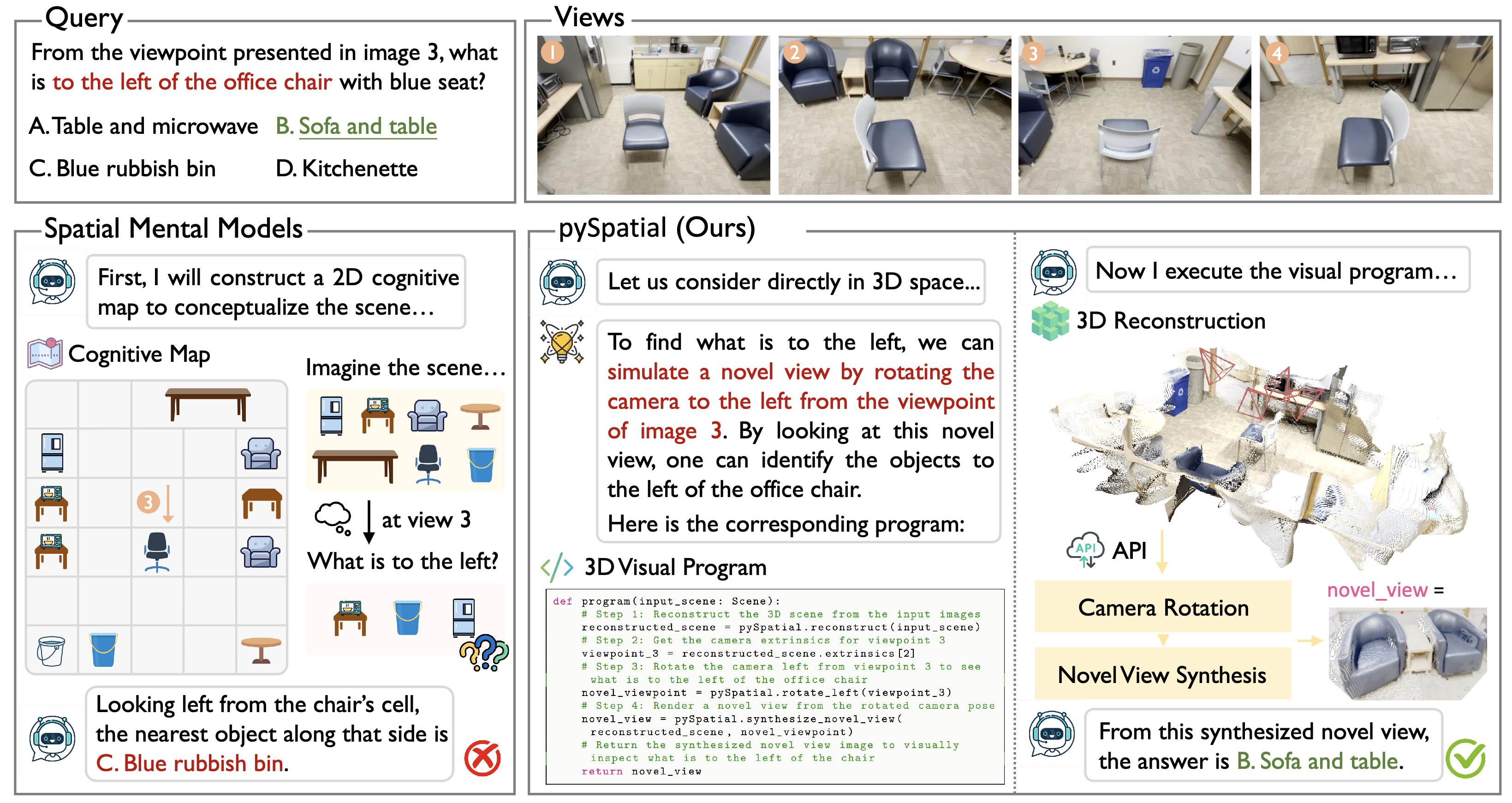

To further illustrate the capabilities of pySpatial, we conduct qualitative experiments on representative examples from the MINDCUBE benchmark. As shown in Figure 2, each query is paired with the generated 3D visual program, the reconstructed 3D scene, the program outputs, and the final response produced by pySpatial. These examples show how pySpatial enables MLLMs to reason explicitly within an explorable 3D scene reconstructed from sparse 2D inputs. By synthesizing executable visual programs that perform operations such as camera translation, rotation, and novel view synthesis, the framework produces interpretable outputs that ground the reasoning process in geometric evidence. Across diverse spatial reasoning tasks, pySpatial produces responses that closely align with ground-truth annotations. The generated 3D visual programs also include well-structured comments that capture the reasoning process of pySpatial, providing transparency that researchers can readily verify, debug, or modify.
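To make the camera operations mentioned above concrete, the following sketch shows how rotating or translating a camera changes the relative direction of an object. It is a simplified top-down (2D) model written for illustration only; `Pose2D`, `rotate`, `move_forward`, and `describe` are hypothetical names, and a real system would work with full 3D camera poses.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Pose2D:
    x: float
    y: float
    heading: float  # radians, counterclockwise from the +x axis


def rotate(pose, yaw_deg):
    """Turn in place; positive yaw is counterclockwise (a left turn)."""
    return Pose2D(pose.x, pose.y, pose.heading + math.radians(yaw_deg))


def move_forward(pose, distance):
    """Translate along the current viewing direction."""
    return Pose2D(
        pose.x + distance * math.cos(pose.heading),
        pose.y + distance * math.sin(pose.heading),
        pose.heading,
    )


def to_camera_frame(pose, point):
    """Express a world point as (forward, left) offsets from the camera."""
    dx, dy = point[0] - pose.x, point[1] - pose.y
    c, s = math.cos(pose.heading), math.sin(pose.heading)
    forward = c * dx + s * dy
    left = -s * dx + c * dy
    return forward, left


def describe(pose, point, eps=1e-9):
    """Return coarse direction labels for the point as seen from the pose."""
    forward, left = to_camera_frame(pose, point)
    depth = "front" if forward > eps else "behind" if forward < -eps else "beside"
    side = "left" if left > eps else "right" if left < -eps else "center"
    return depth, side


camera = Pose2D(0.0, 0.0, 0.0)  # at the origin, looking along +x
chair = (2.0, 1.0)              # hypothetical object position

print(describe(camera, chair))                     # ('front', 'left')
print(describe(rotate(camera, 90.0), chair))       # ('front', 'right')
print(describe(move_forward(camera, 3.0), chair))  # ('behind', 'left')
```

Answering a left/right question then reduces to reading off the sign of one coordinate after the requested camera motion, which is the kind of geometric evidence the program outputs provide.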

| Method | Reference | Overall | Rotation | Among | Around |
|---|---|---|---|---|---|
| Baseline | | | | | |
| Random (chance) | - | 32.35 | 36.36 | 32.29 | 30.66 |
| Random (frequency) | - | 33.02 | 38.30 | 32.66 | 35.79 |
| Open-Weight Multi-Image Models | | | | | |
| LLaVA-OneVision-7B | Li et al. (2025) | 47.43 | 36.45 | 48.42 | 44.09 |
| LLaVA-Video-Qwen-7B | Zhang et al. (2025) | 41.96 | 35.71 | 43.55 | 30.12 |
| mPLUG-Owl3-7B-241101 | Ye et al. (2025) | 44.85 | 37.84 | 47.11 | 26.91 |
| InternVL2.5-8B | Chen et al. (2024) | 18.68 | 36.45 | 18.20 | 13.11 |
| Qwen2.5-VL-7B-Instruct | Bai et al. (2025) | 29.26 | 38.76 | 29.50 | 21.35 |
| Qwen2.5-VL-3B-Instruct | Bai et al. (2025) | 33.21 | 37.37 | 33.26 | 30.34 |
| DeepSeek-VL2-Small | Lu et al. (2024) | 47.62 | 37.00 | 50.38 | 26.91 |
| Proprietary Models | | | | | |
| GPT-4o | OpenAI (2024) | 38.81 | 32.65 | 40.17 | 29.16 |
| GPT-4.1-mini | OpenAI (2025) | 45.62 | 37.84 | 47.22 | 34.56 |
| Claude-4-Sonnet | Anthropic (2025) | 44.75 | 48.42 | 44.21 | 47.62 |
| Specialized Spatial Models | | | | | |
| RoboBrain | Ji et al. (2025) | 37.38 | 35.80 | 38.28 | 29.53 |
| SpaceMantis | Chen et al. (2024) | 22.81 | 37.65 | 21.26 | 29.32 |
| Spatial-MLLM | Wu et al. (2025) | 32.06 | 38.39 | 20.92 | 32.82 |
| Space-Qwen | Chen et al. (2024) | 33.28 | 38.02 | 33.71 | 26.32 |
| VLM-3R | Fan et al. (2025) | 42.09 | 36.73 | 44.22 | 24.45 |
| pySpatial (Ours) | - | 58.56 | 43.20 | 60.54 | 48.10 |
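The 12.94 figure quoted for MINDCUBE appears to correspond to the absolute gap between pySpatial and GPT-4.1-mini in the Overall column of the table above. The short check below recomputes it from the two table entries; the helper names are ours and purely illustrative.

```python
# Overall accuracy values copied from the table above.
overall = {"pySpatial": 58.56, "GPT-4.1-mini": 45.62}


def absolute_margin(ours, baseline):
    """Difference in accuracy, in percentage points."""
    return round(ours - baseline, 2)


def relative_gain(ours, baseline):
    """Improvement relative to the baseline, in percent."""
    return 100.0 * (ours - baseline) / baseline


margin = absolute_margin(overall["pySpatial"], overall["GPT-4.1-mini"])
gain = relative_gain(overall["pySpatial"], overall["GPT-4.1-mini"])
print(margin)  # 12.94
print(gain)
```

The absolute margin is 12.94 percentage points, while the gain relative to the GPT-4.1-mini score is larger, at roughly 28 percent.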

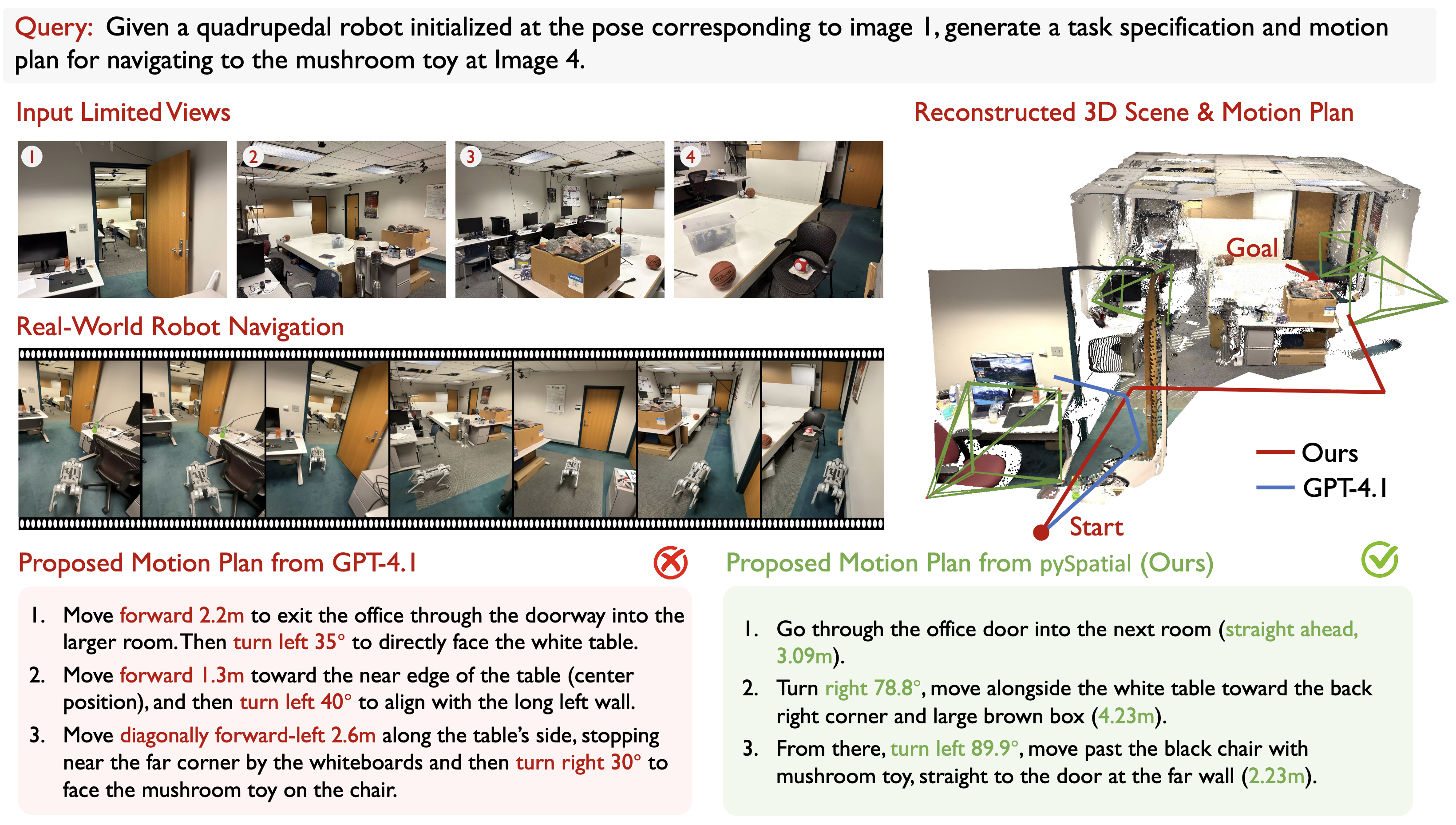

To test the potential for real-world deployment using MLLMs alone, we deploy a quadrupedal robot with a velocity-tracking controller in a 50-square-meter, two-room laboratory. In this setup, the MLLM generates high-level position commands, which are manually converted into temporal velocity targets that the controller tracks, enabling the robot to navigate from an initial pose to a target object (a mushroom toy). From limited 2D views, pySpatial reconstructs an explorable 3D scene, infers camera poses via visual programming, and generates a structured motion plan for the robot to execute.
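The conversion from position commands to temporal velocity targets is done manually in our setup. As a rough sketch of what that step involves, the snippet below turns rotate/translate commands into constant-velocity segments. The command format, the function name, and the nominal speeds (0.5 m/s and 0.5 rad/s) are assumptions made for illustration, not the values used on the robot.

```python
import math
from typing import List, Tuple

Command = Tuple[str, float]                   # ("rotate", degrees) or ("translate", meters)
VelocityTarget = Tuple[float, float, float]   # (forward m/s, yaw rad/s, duration s)


def to_velocity_targets(
    commands: List[Command],
    linear_speed: float = 0.5,  # assumed nominal walking speed
    yaw_rate: float = 0.5,      # assumed nominal turning rate
) -> List[VelocityTarget]:
    """Convert position-level commands into timed velocity segments."""
    if linear_speed <= 0 or yaw_rate <= 0:
        raise ValueError("speeds must be positive")
    targets = []
    for kind, value in commands:
        if value == 0:
            continue  # nothing to execute for a zero-length command
        if kind == "rotate":
            angle = math.radians(value)
            # Turn in place: sign gives direction, duration = angle / rate.
            targets.append((0.0, math.copysign(yaw_rate, angle), abs(angle) / yaw_rate))
        elif kind == "translate":
            # Walk straight: duration = distance / speed.
            targets.append((math.copysign(linear_speed, value), 0.0, abs(value) / linear_speed))
        else:
            raise ValueError(f"unknown command: {kind!r}")
    return targets


plan = [("translate", 2.0), ("rotate", 90.0), ("translate", 1.5)]
for forward, yaw, duration in to_velocity_targets(plan):
    print(f"forward={forward:+.2f} m/s  yaw={yaw:+.2f} rad/s  for {duration:.2f} s")
```

Open-loop timing like this accumulates drift on a real robot, so it should be read as an illustration of the interface rather than a controller design.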

As shown in Figure 3, pySpatial successfully guides the robot through doorways, around the correct turns, and finally to the correct goal location. Notably, the MLLM baseline GPT-4.1 struggles to resolve relative directions such as left versus right and fails to provide absolute metric distance estimates, leading to navigation errors. In contrast, our agent outputs precise rotations and translations that align with real-world execution, resulting in reliable task completion. This experiment demonstrates that our approach not only produces coherent spatial reasoning on question-answering benchmarks, but also transfers effectively to physical robotic platforms for complex indoor navigation tasks.
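To illustrate why an explicit pose makes left/right and distance unambiguous, the sketch below computes a signed turn angle and a travel distance from a robot pose to a goal position. It assumes a top-down frame with metric scale and a heading measured counterclockwise from the +x axis; `plan_turn_and_go` is a hypothetical helper, not part of pySpatial.

```python
import math


def plan_turn_and_go(x, y, heading_deg, goal_x, goal_y):
    """Return (turn_deg, distance) to face and reach the goal.

    turn_deg is wrapped to [-180, 180); positive means turn left
    (counterclockwise), negative means turn right.
    """
    dx, dy = goal_x - x, goal_y - y
    distance = math.hypot(dx, dy)
    bearing = math.degrees(math.atan2(dy, dx))
    turn = (bearing - heading_deg + 180.0) % 360.0 - 180.0
    return turn, distance


# Robot at the origin facing +x; goal three meters along +y.
turn, distance = plan_turn_and_go(0.0, 0.0, 0.0, 0.0, 3.0)
direction = "left" if turn > 0 else "right" if turn < 0 else "straight"
print(f"turn {abs(turn):.1f} deg {direction}, then walk {distance:.2f} m")
```

Wrapping the turn angle keeps the robot from rotating the long way around, and the sign convention removes the left/right ambiguity that a purely language-based estimate can suffer from.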

In this work, we present pySpatial, a visual programming framework that enhances the spatial reasoning capabilities of MLLMs through zero-shot Python code generation. By composing functions such as 3D reconstruction and novel-view synthesis, pySpatial converts 2D image sequences into explorable 3D scenes, enabling explicit reasoning in 3D space. Experiments on the MINDCUBE and OMNI3D-BENCH benchmarks demonstrate that pySpatial consistently outperforms strong MLLM baselines, with a gain of 12.94 percentage points in overall accuracy on MINDCUBE over GPT-4.1-mini. Beyond benchmarks, real-world indoor navigation experiments further validate its practicality, showing that robots can successfully traverse complex environments using route plans generated by pySpatial.